After Trump and Andrew Tate were banned from social media platforms, some interesting conversations started between my peers (Amr, Suhani, Kathan) and me. These are some notes on what we talked about.

Why ban anyone?

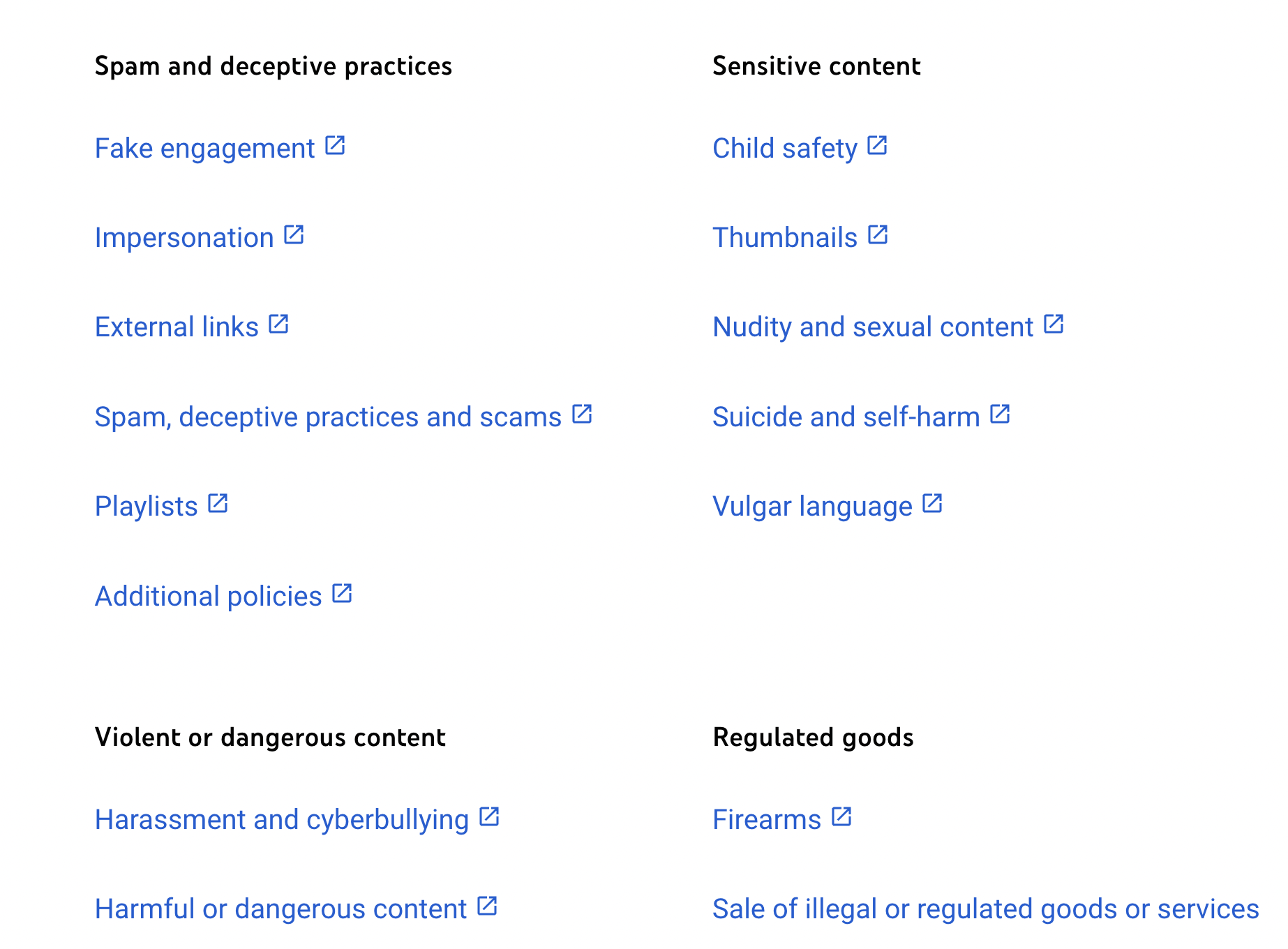

Companies like Instagram and Twitter have detailed community guidelines that, over time, have contributed to the culture of the platform. Think about the “anything goes” attitude on Reddit compared to the strict censorship that occurs on YouTube. The vibe of any platform is usually dictated by its team, initial users, community guidelines, and a little bit of entropy.

Reddit’s community guidelines only consist of 8 rules, and encourage anything as long as it is lawful:

While not every community may be for you (and you may find some unrelatable or even offensive), no community should be used as a weapon.

Meanwhile, YouTube’s community guidelines boast almost 30 separate pages that describe the limitations of the platform in detail.

I don’t think that either is superior, but these guidelines closely match the public’s perception of each platform. In fact, they are a core feature of each platform.

Ignoring morality, it is in a platform’s best interest to enforce these guidelines so as to preserve the sacred culture that has been fostered there. In this sense, it is reasonable that someone (or something) behind the scenes decides the fate of its users, as long as the decision aligns with the community guidelines.

The grey area

Things get tricky around grey area subjects like cyber-bullying, misinformation, and harmful content. In these cases it is up to someone to play god: to append their own morality onto the guidelines and make a decision that will not only affect the creator, but also have trickle-down effects on the rest of the platform’s users.

Trump was banned in the grey area. Andrew Tate was banned in the grey area.

I find it hard to agree that there is anyone all-knowing enough to play god.

Also, I’ve always wondered why companies think that banning a user is better than blocking/deleting the culprit videos themselves.

Misinformation

Before I go over some methodologies to fine-tune our grey area models, I should clarify the meaning of misinformation in the context of social media.

If I were to tell you that a brown couch was brown, you would probably label it as a fact. If I were to tell you that the COVID vaccine is effective, fewer of you might label it as a fact. If I were to tell you that coffee is healthy, even fewer might accept it as a fact.

Factuality is non-binary because of two things:

- Infinite time
  - Something new can always be proven or discovered on a long enough time horizon. It can just as easily be disproven.
- Humanity has no source of truth
  - Research papers can be fraudulent and, believe it or not, your mother may lie to you.

This is the key argument behind social media allowing misinformation: that it might not be misinformation.

It is, however, easy to get a probabilistic view of which things are factual enough based on heuristics like history, research, etc. This is a great opportunity for AI to classify misinformation, although it is difficult.
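To make the "probabilistic view" a bit more concrete, here is a minimal sketch of how a few heuristic signals could be squashed into a single score between 0 and 1. Everything in it is an assumption for illustration: the signal names, the weights, the bias, and the example values are made up, and a real classifier would learn these from data rather than hard-code them.

```python
import math
from typing import Mapping

# Hypothetical heuristic signals, each expected in [0, 1].
# Names and weights are illustrative assumptions, not from any real platform.
WEIGHTS = {
    "historical_consensus": 2.0,
    "research_support": 2.5,
    "source_reliability": 1.5,
}
BIAS = -3.0  # assumed offset so a claim with no support scores low


def factuality_score(signals: Mapping[str, float]) -> float:
    """Combine heuristic signals into a value in (0, 1) via a logistic function."""
    z = BIAS + sum(w * signals.get(name, 0.0) for name, w in WEIGHTS.items())
    return 1.0 / (1.0 + math.exp(-z))


# Made-up signal values for two of the claims mentioned above.
brown_couch = {
    "historical_consensus": 1.0,
    "research_support": 1.0,
    "source_reliability": 1.0,
}
coffee_is_healthy = {
    "historical_consensus": 0.5,
    "research_support": 0.6,
    "source_reliability": 0.5,
}

print(round(factuality_score(brown_couch), 2))
print(round(factuality_score(coffee_is_healthy), 2))
```

The point is only that the output is a degree of confidence rather than a true/false label, so a platform would still have to pick a threshold, and that choice is itself a grey area decision.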

Democracy in social media

Rather than corporate dictators deciding the fate of accounts and posts, social media uses a democratic process: engagement metrics. Post views, likes, comments, and shares act as the votes. The judge, also known as the algorithm, decides the fate of all published content on a platform.
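As a rough sketch of engagement acting as votes, the snippet below ranks posts by a weighted sum of their metrics. The `Post` fields and the weights are hypothetical; real ranking algorithms are not public and are certainly more involved than this.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Post:
    title: str
    views: int
    likes: int
    comments: int
    shares: int


# Assumed weights: how much each kind of "vote" counts. Purely illustrative.
VOTE_WEIGHTS = {"views": 0.01, "likes": 1.0, "comments": 2.0, "shares": 3.0}


def engagement_score(post: Post) -> float:
    """Tally the votes for a post as a weighted sum of its engagement metrics."""
    return sum(weight * getattr(post, metric) for metric, weight in VOTE_WEIGHTS.items())


def rank_feed(posts: List[Post]) -> List[Post]:
    """The 'judge': order posts from most to least engaged."""
    return sorted(posts, key=engagement_score, reverse=True)


posts = [
    Post("quiet post", views=1000, likes=10, comments=2, shares=1),
    Post("viral post", views=100, likes=50, comments=10, shares=5),
]
for post in rank_feed(posts):
    print(post.title, engagement_score(post))
```

Notice that whoever chooses the weights is still making a judgment call about which kind of engagement matters most.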

Leaving opinionated decisions to computers is a blameless and standardized approach to subjective matters. This is a mindset championed by many decentralization advocates. The real challenge here is designing great algorithms to make these decisions for us. This is also where computational ethics plays a role. Code can have bugs, misbehave, or be malicious to begin with, just like people.